Table of Contents

- – Navigating the Evolution of Online Retail: Cha...

- – The Advancement of Online Retail Systems throu...

- – How Adaptive Strategy Determines Engagement R...

- – Selecting the Right Tech Framework for Custom...

- – What Makes a High-Performing E-Commerce Plat...

- – Why Businesses Partner with Professional Dev...

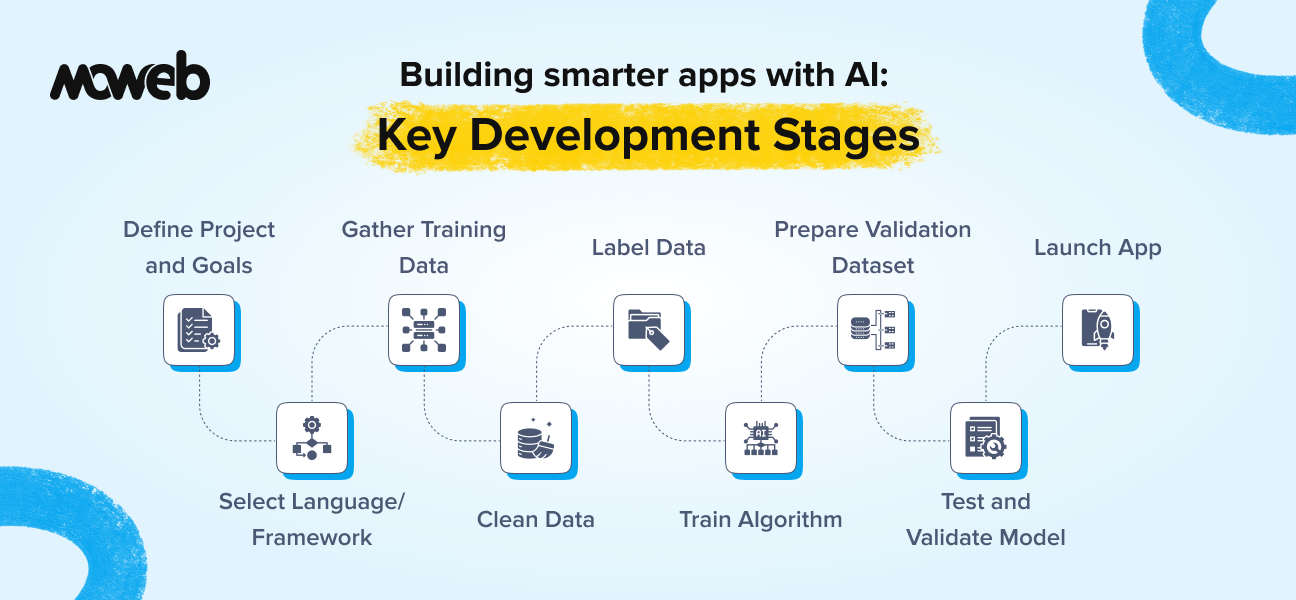

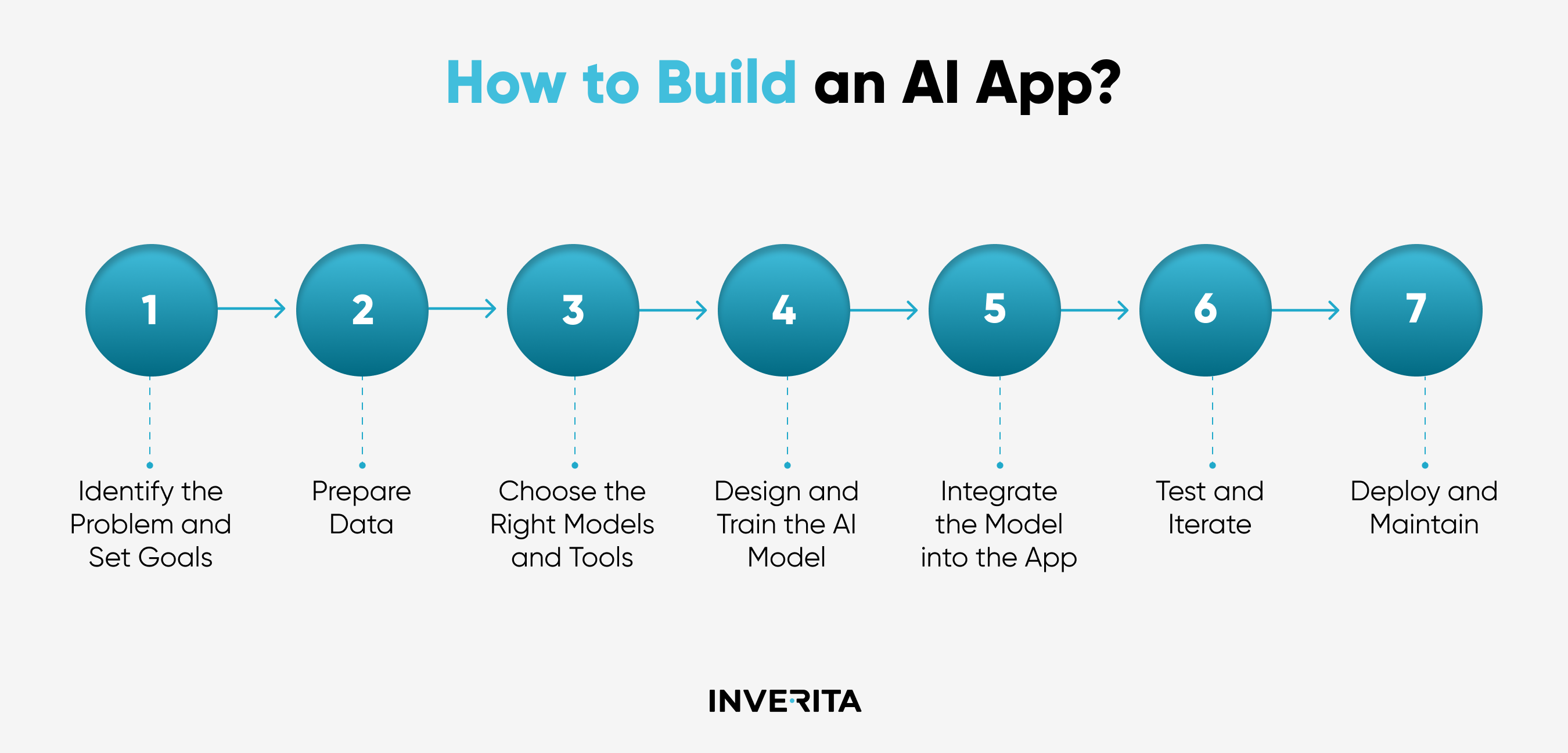

AI implementation is a marathon, not a sprint: it demands research, evaluation, and experimentation to identify the role of AI in your organization and to ensure secure, ethical, and ROI-driven deployment. This guide covers the key considerations, challenges, and stages of the AI project cycle.

Your first goal is to identify AI's role in your operations. The easiest way to approach this is to work backward from your objective(s): What do you want to accomplish with AI implementation? Think in terms of specific problems and measurable outcomes. Half of AI-mature companies rely on a combination of technical and business metrics to measure the ROI of implemented AI use cases.

Navigating the Evolution of Online Retail: Changes with Outlooks

Look for use cases where you've already seen a convincing demonstration of the technology's potential. In the finance sector, AI has proven its value for fraud detection. Machine learning and deep learning models outperform traditional rules-based fraud detection systems by delivering a lower rate of false positives and better results in identifying new types of fraud.

Researchers agree that synthetic datasets can improve privacy and representation in AI, especially in sensitive industries such as healthcare or finance. Gartner predicts that by 2024, as much as 60% of data for AI will be synthetic. All the acquired training data will then have to be pre-cleaned and cataloged. Use a consistent taxonomy to establish clear data lineage, and then track how different users and systems use the supplied data.

The Advancement of Online Retail Systems through Intelligent Features

Furthermore, you'll need to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams handle most data management processes with data pipelines: an automated series of steps for data ingestion, processing, storage, and subsequent access by AI models.

Example of data pipeline architecture for data warehousing

With a robust data pipeline architecture, companies can process very large numbers of data records in milliseconds, in near real-time.
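The split into training, validation, and test datasets can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are all assumptions chosen for the example.

```python
import random

def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records and split them into train, validation, and test lists.

    The fractions are illustrative assumptions; whatever is left after the
    training and validation shares becomes the test set.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test set")

    shuffled = list(records)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Shuffling before slicing matters when records arrive in a meaningful order (for example, sorted by date). For time-dependent data, a chronological split may be the better choice.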

Amazon's Supply Chain Finance Analytics team, in turn, optimized its data engineering workloads with Dremio. With the current setup, the company sets up new extract, transform, load (ETL) jobs 90% faster, while query speed has increased 10X. This, in turn, has made data more accessible for hundreds of concurrent users and machine learning jobs.

How Adaptive Strategy Determines Engagement Rates

The training process is complex, too, and prone to issues like sample efficiency, training stability, and catastrophic interference, among others. Successful commercial applications are still few and mostly come from deep tech companies. Foundation models are the backbone of generative AI. By using a pre-trained, fine-tuned model, you can quickly train a new generative AI algorithm.

Unlike traditional ML frameworks for natural language processing, foundation models require smaller labeled datasets because they have already embedded knowledge during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires massive computational resources.

Selecting the Right Tech Framework for Custom Project Objectives

Training-serving skew happens when model training conditions differ from deployment conditions. Effectively, the model doesn't produce the desired results in the target environment because of differences in parameters or configurations. Data drift takes place when the statistical properties of the input data change over time, affecting the model's performance. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer provide accurate suggestions.
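One common way to check whether an input feature's distribution has shifted is the Population Stability Index (PSI). The article does not name a detection method, so the sketch below is only an illustration: the function name, the bin count, the smoothing constant, and the 0.25 alert threshold (a widely used rule of thumb) are assumptions.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature using PSI.

    `expected` is the training-time sample and `actual` is the live sample.
    Bin edges come from the training sample; live values outside that range
    are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at a tiny value so log() never sees zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical daily order counts at training time and after a shift.
training_orders = [100 + i % 50 for i in range(1000)]
live_orders = [v + 30 for v in training_orders]

psi_same = population_stability_index(training_orders, training_orders)
psi_shifted = population_stability_index(training_orders, live_orders)
print(psi_same, psi_shifted > 0.25)
```

A PSI near zero suggests the live data still resembles the training data, while a large value is a signal to investigate and possibly retrain.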

Instead, most teams maintain a database of model versions and perform iterative model training to progressively improve the quality of the final product. [...] and only 11% are successfully deployed to production.

Then, you benchmark the iterations to identify the model version with the highest accuracy. Feature selection is another important practice. A model with too few features struggles to adapt to variations in the data, while too many features can cause overfitting and worse generalization. Highly correlated features can also cause overfitting and degrade explainability methods.
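Highly correlated features can be flagged before training with a pairwise Pearson correlation check. The following is a minimal sketch under stated assumptions: the helper names, the 0.9 threshold, and the sample feature values are hypothetical and only serve the illustration.

```python
import math
from itertools import combinations

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0 or norm_y == 0:
        return 0.0  # a constant feature has no defined correlation
    return cov / (norm_x * norm_y)

def highly_correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(features.items(), 2):
        r = pearson(a, b)
        if abs(r) >= threshold:
            flagged.append((name_a, name_b, round(r, 3)))
    return flagged

# Hypothetical feature columns for a pricing model.
features = {
    "orders": [10, 20, 30, 40, 50],
    "revenue": [105, 198, 310, 395, 502],
    "conversion_rate": [0.031, 0.027, 0.035, 0.024, 0.030],
}
flagged = highly_correlated_pairs(features)
print(flagged)
```

A flagged pair is a candidate for dropping or combining one of the two features. Whether to do so remains a modeling decision.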

What Makes a High-Performing E-Commerce Platform in Today's Market

Yet it's also the most error-prone one. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased loads.

The team needed to ensure that the ML model was highly available and delivered highly personalized recommendations from the titles available on the user's device, and did so for all of the platform's many users. To ensure high performance, the team chose to run model scoring offline and then serve the results once the user logs into their device.
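The offline-scoring pattern described above can be sketched as a batch job that precomputes recommendations, plus a cheap lookup at login time. This is an illustrative sketch only, not the team's actual implementation: the function names, the in-memory dictionary used as a cache, and the toy scoring table are all assumptions.

```python
def precompute_recommendations(user_ids, catalog, score, top_k=2):
    """Batch job: rank the catalog for each user and keep the top_k titles."""
    cache = {}
    for user_id in user_ids:
        ranked = sorted(catalog, key=lambda title: score(user_id, title),
                        reverse=True)
        cache[user_id] = ranked[:top_k]
    return cache

def serve_recommendations(cache, user_id, fallback):
    """Login-time path: a dictionary lookup, with no model call involved."""
    return cache.get(user_id, fallback)

# Hypothetical stand-in for a trained model's scoring function.
PREFERENCES = {
    "alice": {"Drama A": 0.9, "Comedy B": 0.4, "Thriller C": 0.7},
    "bob": {"Drama A": 0.2, "Comedy B": 0.8, "Thriller C": 0.5},
}

def toy_score(user_id, title):
    return PREFERENCES.get(user_id, {}).get(title, 0.0)

catalog = ["Drama A", "Comedy B", "Thriller C"]
cache = precompute_recommendations(["alice", "bob"], catalog, toy_score)

alice_recs = serve_recommendations(cache, "alice", fallback=catalog[:2])
new_user_recs = serve_recommendations(cache, "carol", fallback=catalog[:2])
print(alice_recs, new_user_recs)
```

The trade-off is freshness: precomputed results reflect the data available at the last batch run, so the batch schedule determines how quickly new behavior shows up in recommendations.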

Why Businesses Partner with Professional Development Services for Their Digital Needs

Ultimately, successful AI model deployments come down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the deployment of regular software, MLOps increases the speed, efficiency, and predictability of AI model deployments.
